Humans commonly work with multiple objects in daily life and can intuitively transfer manipulation skills to novel objects by understanding object functional regularities. However, existing technical approaches for analyzing and synthesizing hand-object manipulation are mostly limited to handling a single hand and object due to the lack of data support.

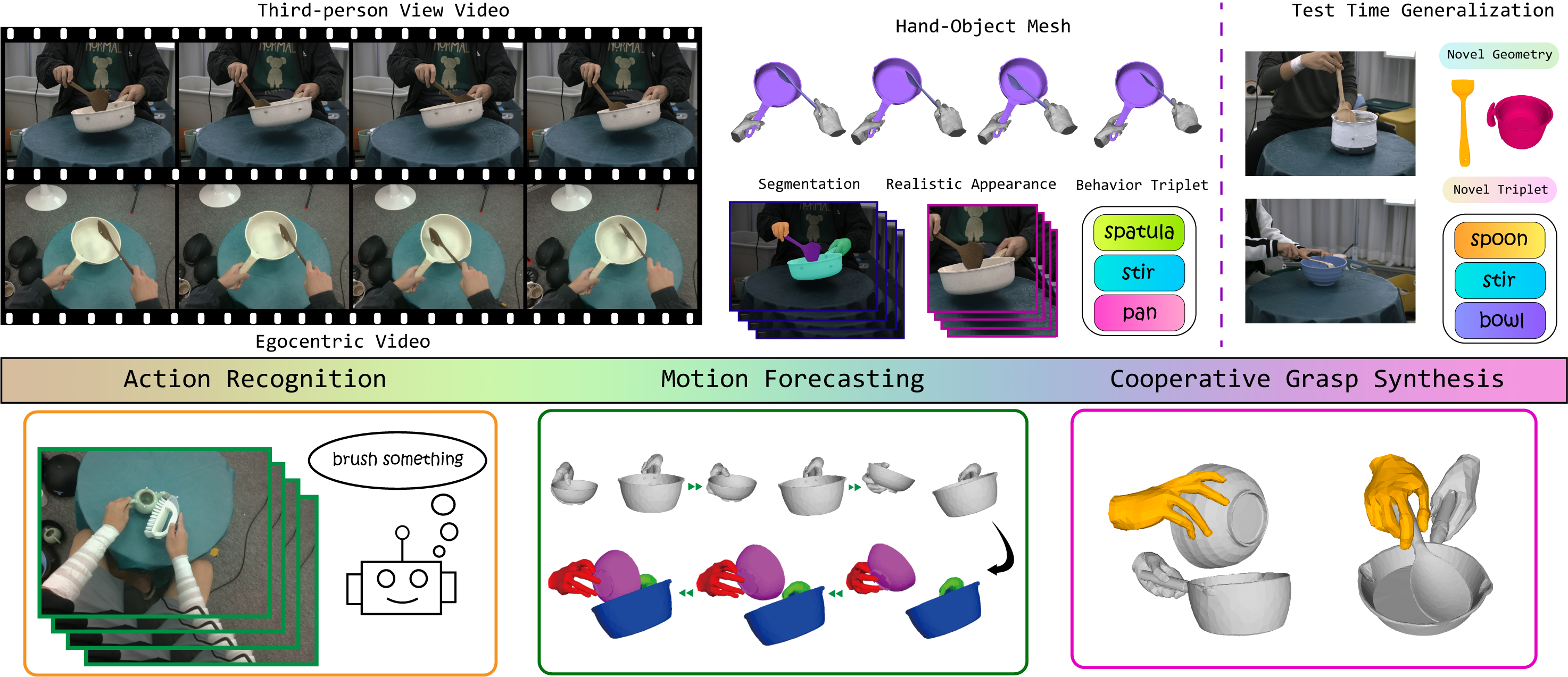

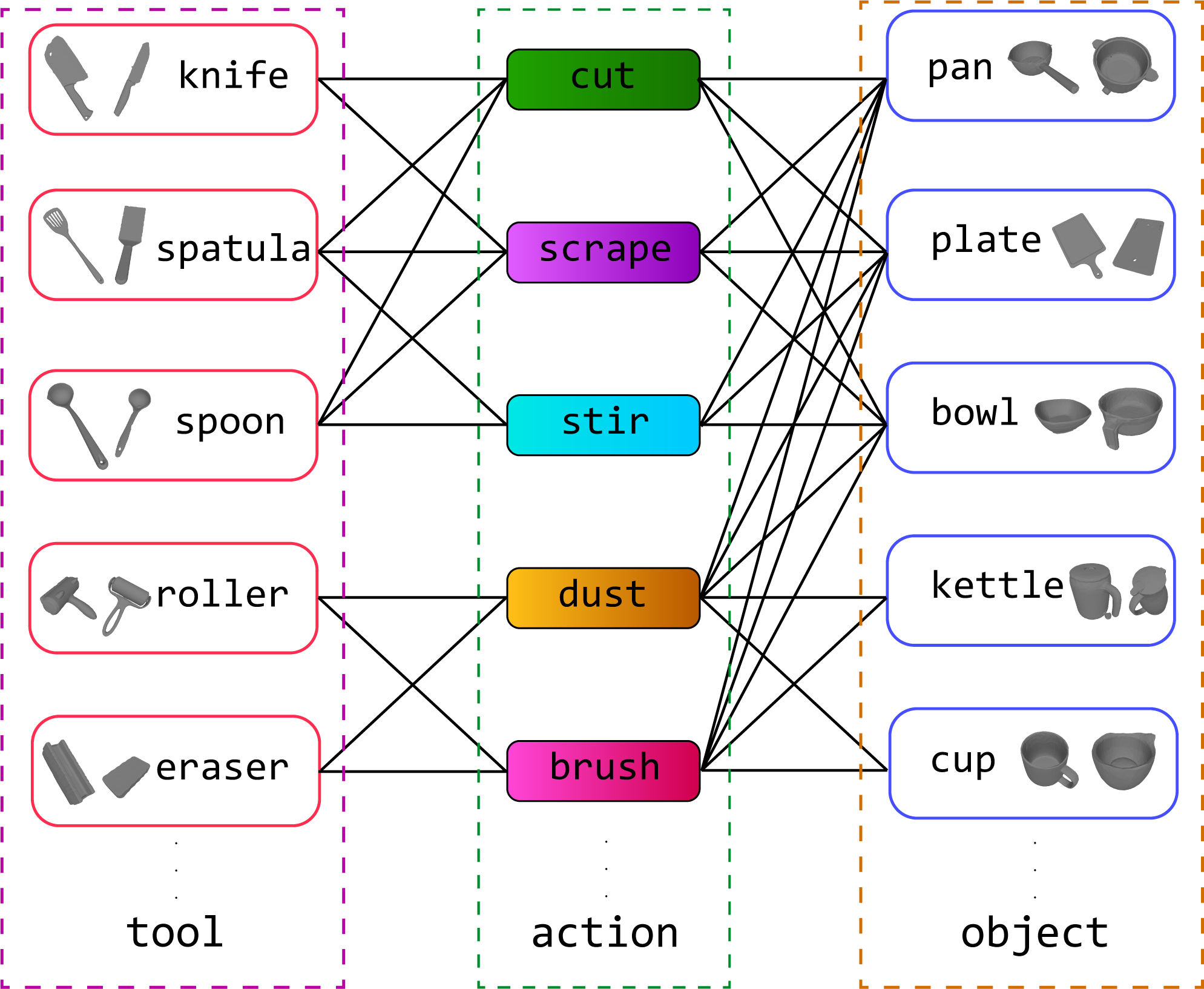

To address this, we construct TACO, an extensive bimanual hand-object-interaction dataset spanning a large variety of tool-action-object compositions for daily human activities. TACO contains 2.5K motion sequences paired with third-person and egocentric views, precise hand-object 3D meshes, and action labels.

To rapidly expand the data scale, we present a fully automatic data acquisition pipeline combining multi-view sensing with an optical motion capture system.

With the vast research fields provided by TACO, we benchmark three generalizable hand-object-interaction tasks: compositional action recognition, generalizable hand-object motion forecasting, and cooperative grasp synthesis. Extensive experiments reveal new insights, challenges, and opportunities for advancing the studies of generalizable hand-object motion analysis and synthesis.